Detecting illegal and offensive content with AI

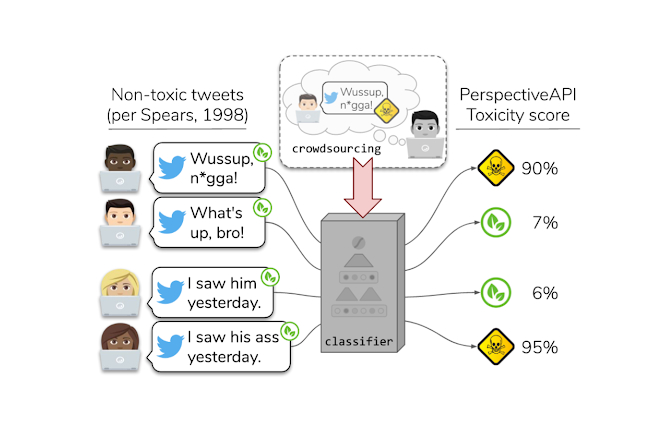

Photo by the authors of The Risk of Racial Bias in Hate Speech Detection

In May 2022, the European Commission presented a draft EU law on mandatory chat control, intended to fight “child pornography” more efficiently.

The proposed legislation would require tech companies in Europe to scan their platforms and products for child pornography in a fully automated way and report their findings to law enforcement. The draft legislation also proposes using AI to detect language patterns associated with grooming (befriending and establishing an emotional connection with a child to lower the child’s inhibitions, with the objective of sexual abuse).

However, critics point out that these AI-based scanning technologies are ineffective and that the proposed chat control threatens the privacy of digital communications, would destroy secure encryption, and could result in the mass surveillance of private photos. They warn that this proposal is a giant step towards a Chinese-style surveillance state.

While AI can certainly be used for text classification, its use for detecting illegal and offensive content is far from reliable. A 2019 study that analyzed racial bias in widely used corpora of annotated toxic language showed that leading AI models are 1.5 times more likely to flag tweets written by African Americans as “offensive” compared to other tweets.

The same is true for detecting nudity. Experts point out that training AI to recognize nudity is a difficult task. When Tumblr, a popular microblogging and social networking website, started banning pornographic content in 2018, its AI system was highly inaccurate and flagged many innocent posts as adult content.

Detecting child pornography is even harder. According to the Swiss Federal Police, 87% of the reports of illegal child pornography content they receive are criminally irrelevant.
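The 87% figure can be read as a precision of roughly 13%: only about 13 in 100 reports turn out to be relevant. The source does not say why so many reports are irrelevant. One common explanation for low precision in automated screening, offered here only as an illustration, is the base-rate effect: when the targeted content is rare, even a classifier with a low false positive rate produces mostly false alarms. The sketch below uses hypothetical values for prevalence, sensitivity and false positive rate; none of them come from the Swiss Federal Police or from the draft law.

```python
def precision(prevalence: float, sensitivity: float, false_positive_rate: float) -> float:
    """Share of flagged items that are truly illegal (positive predictive value)."""
    true_positives = prevalence * sensitivity
    false_positives = (1.0 - prevalence) * false_positive_rate
    return true_positives / (true_positives + false_positives)


# Figure from the article: 87% of reports are criminally irrelevant.
reported_irrelevant_share = 0.87
reported_precision = 1.0 - reported_irrelevant_share

# Hypothetical scanner (assumed values, for illustration only):
# 1 in 10,000 messages is illegal, 99% of illegal items are caught,
# and 0.1% of legal items are wrongly flagged.
hypothetical_precision = precision(
    prevalence=0.0001,
    sensitivity=0.99,
    false_positive_rate=0.001,
)

print(f"Precision implied by the reported figure: {reported_precision:.2f}")
print(f"Precision of the hypothetical scanner:    {hypothetical_precision:.3f}")
```

Under these assumed numbers, most flagged items are false alarms even though the scanner looks accurate on paper, which is why each flagged item still needs human review.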

A study from Imperial College London published last year also found that AI algorithms that detect illegal images on devices (so-called perceptual hashing-based client-side scanning, or PH-CSS, algorithms) can be easily fooled with small, invisible changes to images. The researchers, who tested the robustness of five similar algorithms, found that small modifications that alter an image’s unique signature let more than 99.9% of images avoid detection while preserving the content of the image. They concluded that detecting illegal content such as child sexual abuse material with these algorithms is neither effective nor proportionate.
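To make the idea of a perceptual hash and its weakness more concrete, here is a minimal sketch using an "average hash", a simple and well-known perceptual hashing scheme. It is an assumption for illustration only: the article does not say which five algorithms the study tested, and the attacks in the study are far more sophisticated than this toy example. The 4x4 image, the threshold of 4 bits and the helper names (`average_hash`, `hamming_distance`, `is_match`) are all hypothetical.

```python
from typing import List

Image = List[List[int]]  # grayscale pixel values, 0-255


def average_hash(image: Image) -> int:
    """Toy perceptual hash: one bit per pixel, 1 if the pixel is above the mean."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def is_match(image: Image, known_hash: int, threshold: int) -> bool:
    """Flag the image if its hash is within `threshold` bits of a known hash."""
    return hamming_distance(average_hash(image), known_hash) <= threshold


# Toy "known" image whose pixels all sit close to the mean brightness.
original: Image = [[120, 136, 120, 136] for _ in range(4)]
known_hash = average_hash(original)

# Shift every pixel by 9 out of 255 levels, a change that is hard to see.
perturbed: Image = [[p + 9 if p < 128 else p - 9 for p in row] for row in original]

print("original flagged: ", is_match(original, known_hash, threshold=4))
print("perturbed flagged:", is_match(perturbed, known_hash, threshold=4))
print("bits changed:     ", hamming_distance(known_hash, average_hash(perturbed)))
```

The toy image is deliberately chosen so that a tiny brightness shift flips every bit of the hash. Real images and real hashing schemes are more robust than this, but the sketch shows the general mechanism: matching relies on a distance threshold between signatures, and an adversary only needs to push the signature past that threshold without visibly changing the picture.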

While AI can certainly be helpful in criminal investigations, it should not replace human decision-making. AI is not omnipotent: it can be unfair and biased and can increase discrimination, it can give us a false sense of reliability, and it often lacks transparency.

Author: Matej Kovačič

Links:

EU chat control bill: fundamental rights terrorism against trust, self-determination and security on the Internet, https://www.patrick-breyer.de/en/eu-chat-control-bill-fundamental-rights-terrorism-against-trust-self-determination-and-security-on-the-internet/

The Risk of Racial Bias in Hate Speech Detection, https://homes.cs.washington.edu/~msap/pdfs/sap2019risk.pdf

Porn: you know it when you see it, but can a computer? https://www.theverge.com/2019/1/30/18202474/tumblr-porn-ai-nudity-artificial-intelligence-machine-learning

Proposed illegal image detectors on devices are ‘easily fooled’, https://www.imperial.ac.uk/news/231778/proposed-illegal-image-detectors-devices-easily/

Adversarial Detection Avoidance Attacks: Evaluating the robustness of perceptual hashing-based client-side scanning, https://www.usenix.org/conference/usenixsecurity22/presentation/jain